What is Performance Testing?

Performance Testing checks the speed, response time, reliability, resource usage and scalability of a software program under its expected workload. The purpose of Performance Testing is not to find functional defects but to eliminate performance bottlenecks in the software or device.

The focus of Performance Testing is checking a software program's:

- Speed - Determines whether the application responds quickly

- Scalability - Determines the maximum user load the software application can handle.

- Stability - Determines if the application is stable under varying loads

Performance Testing is popularly called “Perf Testing” and is a subset of performance engineering.

Why do Performance Testing?

Features and functionality supported by a software system are not the only concern. A software application's performance, such as its response time, reliability, resource usage and scalability, also matters. The goal of Performance Testing is not to find bugs but to eliminate performance bottlenecks.

Performance Testing is done to provide stakeholders with information about their application's speed, stability and scalability. More importantly, Performance Testing uncovers what needs to be improved before the product goes to market. Without Performance Testing, software is likely to suffer from issues such as running slowly when several users use it simultaneously, inconsistencies across different operating systems, and poor usability.

Performance testing determines whether the software meets speed, scalability and stability requirements under expected workloads. Applications released with poor performance metrics because of nonexistent or poor performance testing are likely to gain a bad reputation and fail to meet expected sales goals.

Also, mission-critical applications like space launch programs or life-saving medical equipment should be performance tested to ensure that they run for a long period without deviations.

A 5-minute outage of Google.com on 19 August 2013 is estimated to have cost the search giant as much as $545,000.

It is estimated that companies lost sales worth $1,100 per second during a recent Amazon Web Services outage.

Hence, performance testing is important.

Types of Performance Testing

Load testing: checks the application's ability to perform under anticipated user loads. The objective is to identify performance bottlenecks before the software application goes live.

Stress testing: involves testing an application under extreme workloads to see how it handles high traffic or data processing. The objective is to identify the breaking point of an application.

Endurance testing: is done to make sure the software can handle the expected load over a long period of time.

Spike testing: tests the software's reaction to sudden large spikes in the load generated by users.

Volume testing: populates a database with a large volume of data and monitors the overall software system's behavior. The objective is to check the software application's performance under varying database volumes.

Scalability testing: the objective of scalability testing is to determine how effectively the software application "scales up" to support an increase in user load. It helps you plan capacity additions to your software system.
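Several of these test types differ mainly in the shape of the load applied over time. As a rough sketch, assuming load is expressed as a target number of concurrent virtual users for each second of a run, the profiles could be generated as below. The function name `load_profile`, its parameters, the linear ramp used for stress testing and the middle-third burst used for spike testing are illustrative assumptions, not the behavior of any particular tool. Volume and scalability testing are not modelled here, since they vary the data size or repeat a load test at increasing levels rather than reshaping a single run.

```python
def load_profile(kind: str, duration_s: int, base_users: int, peak_users: int) -> list[int]:
    """Return the target number of concurrent virtual users for each second of a test run.

    `kind` is one of the hypothetical labels "load", "endurance", "stress" or "spike".
    """
    if duration_s <= 0:
        raise ValueError("duration_s must be positive")
    if kind in ("load", "endurance"):
        # Steady expected load; an endurance run uses the same shape, just for much longer.
        return [base_users] * duration_s
    if kind == "stress":
        # Ramp linearly from the expected load up to peak_users to look for the breaking point.
        if duration_s == 1:
            return [peak_users]
        step = (peak_users - base_users) / (duration_s - 1)
        return [round(base_users + i * step) for i in range(duration_s)]
    if kind == "spike":
        # Expected load, with a sudden jump to peak_users during the middle third of the run.
        start, end = duration_s // 3, 2 * duration_s // 3
        return [peak_users if start <= t < end else base_users for t in range(duration_s)]
    raise ValueError(f"unknown profile kind: {kind!r}")
```

For example, `load_profile("spike", 9, 10, 100)` returns `[10, 10, 10, 100, 100, 100, 10, 10, 10]`: the expected load of 10 users, a sudden jump to 100, then a return to normal.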

Performance testing metrics:

A number of performance metrics, also known as key performance indicators (KPIs), can help an organization evaluate current performance compared to baselines.

Performance metrics commonly include:

Throughput: how many units of information a system processes over a specified time;

Memory: the working storage space available to a processor or workload;

Response time, or latency: the amount of time that elapses between a user-entered request and the start of a system's response to that request;

Bandwidth: the volume of data per second that can move between workloads, usually across a network;

CPU interrupts per second: the number of hardware interrupts a process receives per second.

These metrics and others help an organization perform multiple types of performance tests.
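To illustrate how two of these metrics are derived from raw test results, the sketch below computes throughput and response-time statistics from a list of recorded latencies. It assumes latencies are measured in milliseconds and that the test ran for a known duration; the function names and the nearest-rank percentile method are illustrative choices, not a standard any particular tool follows.

```python
import math


def percentile(values: list[float], pct: float) -> float:
    """Nearest-rank percentile: the smallest value with at least pct% of values at or below it."""
    if not values:
        raise ValueError("no values")
    ordered = sorted(values)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def summarize(latencies_ms: list[float], duration_s: float) -> dict[str, float]:
    """Summarize one test run: throughput plus mean, median, p95 and max response time."""
    if not latencies_ms:
        raise ValueError("no latencies recorded")
    if duration_s <= 0:
        raise ValueError("duration_s must be positive")
    return {
        "requests": len(latencies_ms),
        # Throughput: completed requests per second over the whole run.
        "throughput_rps": len(latencies_ms) / duration_s,
        "mean_ms": sum(latencies_ms) / len(latencies_ms),
        "p50_ms": percentile(latencies_ms, 50),
        "p95_ms": percentile(latencies_ms, 95),
        "max_ms": max(latencies_ms),
    }


if __name__ == "__main__":
    stats = summarize([120, 80, 95, 300, 110, 105, 90, 100, 85, 2000], duration_s=5.0)
    for name, value in stats.items():
        print(f"{name}: {value}")
```

With latencies of `[120, 80, 95, 300, 110, 105, 90, 100, 85, 2000]` ms over 5 seconds, this reports a throughput of 2.0 requests per second, a median of 100 ms and a mean of 308.5 ms. The single 2,000 ms outlier pulls the mean far above the median, which is why percentiles are often reported alongside averages.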

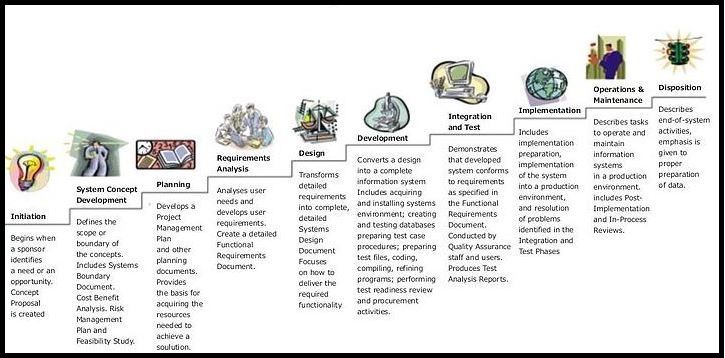

Performance Testing Process:

The methodology adopted for performance testing can vary widely, but the objectives of performance tests remain the same. Performance testing can help demonstrate that your software system meets certain predefined performance criteria, compare the performance of two software systems, or identify the parts of your software system that degrade its performance.

Below is a generic process for performance testing:

Identify your testing environment: Know your physical test environment, production environment and what testing tools are available. Understand details of the hardware, software and network configurations used during testing before you begin the testing process. It will help testers create more efficient tests. It will also help identify possible challenges that testers may encounter during the performance testing procedures.

Identify the performance acceptance criteria: This includes goals and constraints for throughput, response times and resource allocation. It is also necessary to identify project success criteria outside of these goals and constraints. Testers should be empowered to set performance criteria and goals, because project specifications often do not include a wide enough variety of performance benchmarks, and sometimes include none at all. When possible, finding a similar application to compare against is a good way to set performance goals.

Plan & design performance tests: Determine how usage is likely to vary amongst end users and identify key scenarios to test for all possible use cases. It is necessary to simulate a variety of end users, plan performance test data and outline what metrics will be gathered.

Configure the test environment: Prepare the testing environment before execution. Also, arrange tools and other resources.

Implement the test design: Create the performance tests according to your test design.

Run the tests: Execute and monitor the tests.

Analyze, tune and retest: Consolidate, analyze and share test results. Then fine-tune and test again to see whether performance has improved or degraded. Since improvements generally grow smaller with each retest, stop when the CPU becomes the bottleneck. At that point, you may have to consider increasing CPU power.
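The run, analyze and retest steps can be sketched in a few lines of Python. This is a minimal illustration, assuming the system under test can be called through a plain function; here `slow_request` and `tuned_request` are hypothetical stand-ins that just sleep, whereas a real test would call the application over the network, typically through a dedicated load-testing tool. `run_load`, `p95`, `meets_criteria` and `percent_change` are hypothetical helpers, and the thresholds (a p95 latency ceiling and a throughput floor) are example acceptance criteria, not recommended values. Note that the latency measured here covers the whole call, not only the time until the response starts.

```python
import math
import time
from concurrent.futures import ThreadPoolExecutor
from typing import Callable


def run_load(request: Callable[[], None], users: int, requests_per_user: int) -> tuple[list[float], float]:
    """Simulate `users` concurrent virtual users, each calling `request` repeatedly.

    Returns the latency of every call in milliseconds and the wall-clock duration in seconds.
    """
    def user_session() -> list[float]:
        latencies = []
        for _ in range(requests_per_user):
            t0 = time.perf_counter()
            request()  # in a real test: e.g. an HTTP request to the system under test
            latencies.append((time.perf_counter() - t0) * 1000)
        return latencies

    start = time.perf_counter()
    with ThreadPoolExecutor(max_workers=users) as pool:
        sessions = [pool.submit(user_session) for _ in range(users)]
        latencies_ms = [ms for s in sessions for ms in s.result()]
    return latencies_ms, time.perf_counter() - start


def p95(latencies_ms: list[float]) -> float:
    """95th-percentile latency using the nearest-rank method."""
    if not latencies_ms:
        raise ValueError("no latencies recorded")
    ordered = sorted(latencies_ms)
    return ordered[max(1, math.ceil(95 * len(ordered) / 100)) - 1]


def meets_criteria(latencies_ms: list[float], wall_s: float, max_p95_ms: float, min_rps: float) -> bool:
    """Example acceptance criteria: a p95 latency ceiling and a throughput floor."""
    return p95(latencies_ms) <= max_p95_ms and len(latencies_ms) / wall_s >= min_rps


def percent_change(baseline: float, retest: float) -> float:
    """Relative change from the baseline run to the retest; negative means the value dropped."""
    return (retest - baseline) / baseline * 100


def slow_request() -> None:
    time.sleep(0.020)  # hypothetical system before tuning


def tuned_request() -> None:
    time.sleep(0.010)  # hypothetical system after tuning


if __name__ == "__main__":
    base_lat, base_wall = run_load(slow_request, users=5, requests_per_user=10)
    new_lat, new_wall = run_load(tuned_request, users=5, requests_per_user=10)
    print("meets criteria:", meets_criteria(new_lat, new_wall, max_p95_ms=50, min_rps=100))
    print(f"p95 change after tuning: {percent_change(p95(base_lat), p95(new_lat)):+.1f}%")
```

Threads are used because each simulated user spends most of its time waiting on I/O. For CPU-heavy clients or very high user counts, a dedicated tool such as Apache JMeter is usually a better fit than a hand-written driver.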

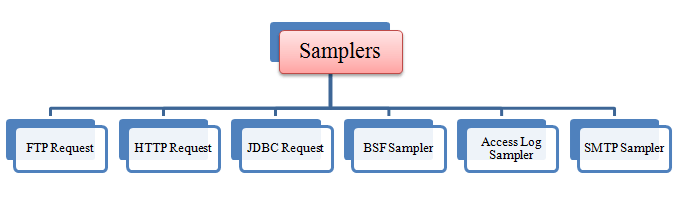

Many tools are available on the market for performance testing:

Apache JMeter

WebLOAD

LoadUI Pro

LoadView

NeoLoad

LoadRunner

Silk Performer

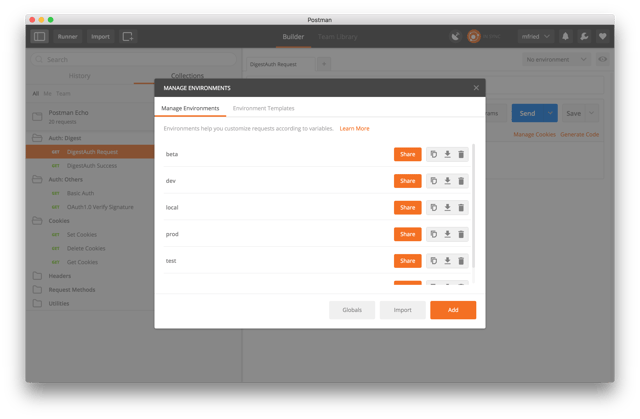

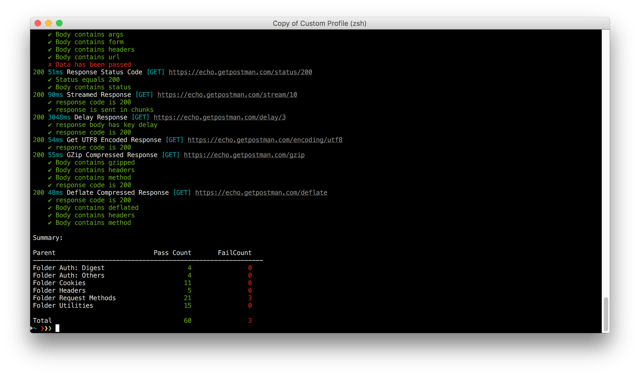

For more details about POSTMAN, use this link.